Activity Logging was introduced in Dynamics 365 version 8 and still exists today within Common Data Service. It’s in addition to the existing auditing capability that has been available in Dynamics 365 since CRM 2011.

How Activity Logging differs from existing Dynamics 365 auditing

Existing audit logging covers enabling and viewing logging for specific entities and attributes that you select. What is logged is largely based on the context of the activity. When a record is opened, activities such as who created the record, what values were entered and changed, who updated it, and so on are logged for that particular record.

Whereas Activity Logging:

- Logs user and admin activities across Office and Dynamics 365 for Customer Engagement apps.

- Occurs at the SDK layer of Dynamics 365 for Customer Engagement apps, meaning that more data is logged than just activities.

For more information about Activity Logging, review Enable and use Activity Logging

Scenario description

Activity Logging is stored in Office 365, whereas traditional audits are stored in the Dynamics 365 database. Customers might want to move the activity logs to an external source for the following reasons:

- Activity logs are retained in Office 365 for 90 days. Most companies require audit data to be stored for longer periods of time for compliance reasons. The Office Security Compliance Center provides an option to download the logs as CSV files; however, this might not be a scalable or reliable solution.

- Customers might want to move these logs out to an external data source for reporting purposes in addition to the out-of-the-box (OOB) reports available in the Office Security Compliance Center.

- Office Security Compliance Center provides the Unified Audit log to search audit logs. Users can search audit records related to SharePoint, Exchange, Azure AD and Dynamics 365 Activity Logging. There is no option to restrict the searching to Dynamics 365 activity logs. This might be a problem for some customers.

- Adding to the previous point, permissions and role requirements for using Office Security Compliance Center can be a potential problem as well.

The Office 365 global administrator role or one or more Security & Compliance Center role groups is required to search the logs.

Solution overview (proof of concept)

The Office 365 Management Activity APIs can be used to copy the activity logs to an external data source. These APIs provide information on the user, admin, system, policy actions, and events from Office 365 and Azure Active Directory (Azure AD) activity logs.

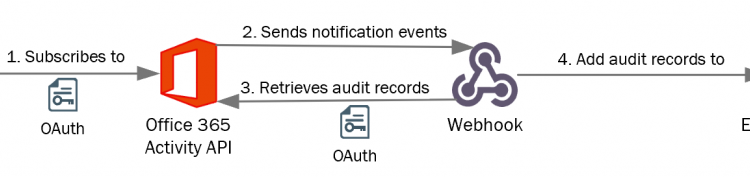

For this blog, only Activity Logging for Dynamics 365 will be taken into account. The following diagram shows the high-level architecture for the solution.

- The sole purpose of the client application is to subscribe to the Office 365 Management Activity APIs events. It makes a call to the APIs and provides the address of the webhook to receive notifications. The client application can be a postman, an Azure web app, or a Microsoft Flow. Office 365 Management Activity APIs allow subscriptions to the logs of multiple Microsoft services and if we were to subscribe to the Office 365 Management Activity API for Dynamics 365 Activity Logging, we would need to ensure that we set the ContentType to General.

The {TenantId} in the above URL represents your Office 365 tenant ID.

For more information about Office 365 Management Activity API subscriptions, see Start a subscription.

- Office 365 Management Activity APIs send notifications to the webhook when new content is available. The webhook performs the following steps:

The webhook can be implemented as a function app, a web API or a flow, and so on.

I have built a proof of concept (PoC) using Microsoft Flow as the client application and the webhook, and Azure Cosmos DB as an external storage.

I have chosen Microsoft Flow because it is a no-code option and does not require hosting as some of the other options, like Azure Functions or Azure web APIs.

Activity Logging records are retrieved as JSON objects, and Azure Cosmos DB can store them as documents without any changes. There is no schema or index management in Azure Cosmos DB and it is relatively cheaper than Azure SQL. These are some of the reasons for choosing Azure Cosmos DB as a data store.

Results

The design approach will copy the Activity Logging to Azure Cosmos DB. Users are then able to use the Azure portal to check the audit records. The following screenshot shows the audit records in the Data Explorer tab of Azure Cosmos DB. You can also use Cosmos DB explorer to manage and query the data.

Since the data is now within Azure Cosmos DB, we can then use SQL query language to retrieve data and develop reporting.

Tip: Activity Logging uses an array of name-value pair for storing field changes. To query the audit records based on the field value, use collection[Value] in the SQL query instead of collection.Value because Value is the keyword in Azure Cosmos DB. The query in the above screenshot demonstrates the same.

Building a proof of concept (PoC)

These are the steps I went through to construct a proof of concept to store Activity Logging within Azure Cosmos DB:

- Download the flows used in the solution.

- Register an app in Azure AD to access Office 365 Management Activity APIs. The client ID and the secret key of this app will be used for OAuth authentication. OAuth authentication is used in the webhook and client application flows to connect to Office 365 Management Activity APIs.

- Create an Azure Cosmos DB to store the activity logs.

- Import the webhook flow.

- Import the client application flow.

1. Download the flows

Download the following two Microsoft Flows used in the solution:

- Activity Logging blog – Subscribe: This flow is used as a client application that subscribes to the Office 365 Management Activity APIs.

- Activity Logging blog – Webhook: This flow is used as a webhook that processes the notification.

2. Register an app to access the Office 365 Management Activity APIs

The first step is to register an app to permit access to the Office 365 Management Activity API.

- Sign in to the Azure portal.

- In the left-hand navigation pane, select the Azure Active Directory service, and then select App registrations > New registration.

- Enter the information as shown in the following screen and select Register.

![Register an web app]()

- Copy the Application (client) ID. This is required to make API calls.

![App Registration step 4]()

- In the left-hand navigation pane, select the API permissions, and then select Add a permission.

![App Registration step 5]()

- Select Office 365 Management APIs.

![]()

- Select Delegated permissions, select the check box Read, and then select Add permissions.

![App Registration step 7]()

- Select Grant admin consent for ..

![]()

- In the left-hand navigation pane, select Certificates & secrets, and then select New client secret.

![App Registration step 9]()

- Copy the key because it is required for authentication. The key will be hidden permanently once you navigate away from this tile.

![App Registration step 10]()

3. Create an Azure Cosmos DB

Check the following link for instructions on how to create the Azure Cosmos DB:

https://docs.microsoft.com/en-us/azure/cosmos-db/create-sql-api-dotnet#create-account

We have created a database named Audit and a container named Audits with UserId as the partition key.

4. Import the webhook flow

- The proof of concept is using Microsoft Flow as a webhook to receive notifications in this solution. Import the flow from the zip file downloaded in step 1. Check the link for instructions: https://flow.microsoft.com/en-us/blog/import-export-bap-packages/.

- Open the flow in Edit mode.

![webhook step 2]()

- Open step 1 – When a HTTP request is received and copy the HTTP POST URL. The URL will be used as a webhook address to subscribe to the notifications in the client application Microsoft Flow.

![Webhook step 3]()

- Replace the values for TenantId, ClientId, and Secret variables in steps 2, 3, and 4. The TenantId is your Azure Tenant ID and ClientId and Secret come from the Register an app to access Office 365 Management Activity APIs heading above.

- Open step 6 and Add new connection for your Azure Cosmos DB database.

![webhook step 5]()

- Save the Flow.

Note: This flow is using a Response action before step 6 (processing of audit records). There are two reasons behind it:

- When you subscribe for notifications, the Office 365 Management Activity APIs expect an HTTP 200 response back. Because this flow is written to retrieve the blob URI contents and process them, it will fail. So, I have pushed the response step before processing in step 6.

- The webhook notification can have multiple content URIs, and those content URIs have a collection of audit records. It is possible that the Office 365 API connection will close before the webhook processes all the records and this will cause the flow to fail.

5. Import the client application flow

The PoC uses Microsoft Flow as a client application that calls Office 365 Management Activity APIs to subscribe to the Activity Logging notification.

- Import the flow from the zip file downloaded in step 1. https://flow.microsoft.com/en-us/blog/import-export-bap-packages/.

- Open the flow in Edit mode.

![Subscription step 2]()

- Replace the values for variables TenantId, ClientId, and Secret in steps 2, 3, and 4. The TenantId is your Azure Tenant ID and ClientId and Secret come from the Register an app to access Office 365 Management Activity APIs heading above.

- Replace the value of variable WebhookAddress with the HTTP URL POST copied from the webhook flow.

- Save and run the flow. If the subscription is successful, you will receive the following response for step 6.

![Subscription step 5]()

Taking it further

This PoC should be used as a template. Its not a production-ready solution.

- Some customers might want to consider the webhook just as a notification receiver and run the processing in a separate flow.

- Extending on point 1, customers might want to push the audit collection into an Azure Service Bus queue and then process the records for scalability and reliability. The following diagram has been taken from the work that my colleague Simon Matthews is doing on Activity Logging.

![Taking it further]()

- There is one step missing in the above solution. In step 6 of the webhook flow, when the flow makes an HTTP request to retrieve the audit collection from the content URI, if the number of records in the blob is too many, then the response might not send all the records and send a URL for the next page in the response header (NextPageUri). If this is the case, customers might want to implement paging. My test environment does not have that many activity log records to implement this. Please check the pagination section on the Activity APIs page:

https://docs.microsoft.com/office/office-365-management-api/office-365-management-activity-api-reference

We hope you find this approach a useful scenario to show how you can copy activity logs out of Office 365.

Thank you for your interest in the topic and feel free to comment and provide your feedback.

Amreek Singh

FastTrack Solution Architect

Microsoft Dynamics 365 Customer Engagement

The post Storing Common Data Service activity logs outside of Office 365 appeared first on Microsoft Dynamics 365 Blog.